How to replatform Endeca rules to Elasticsearch

Feb 18, 2020 • 7 min read

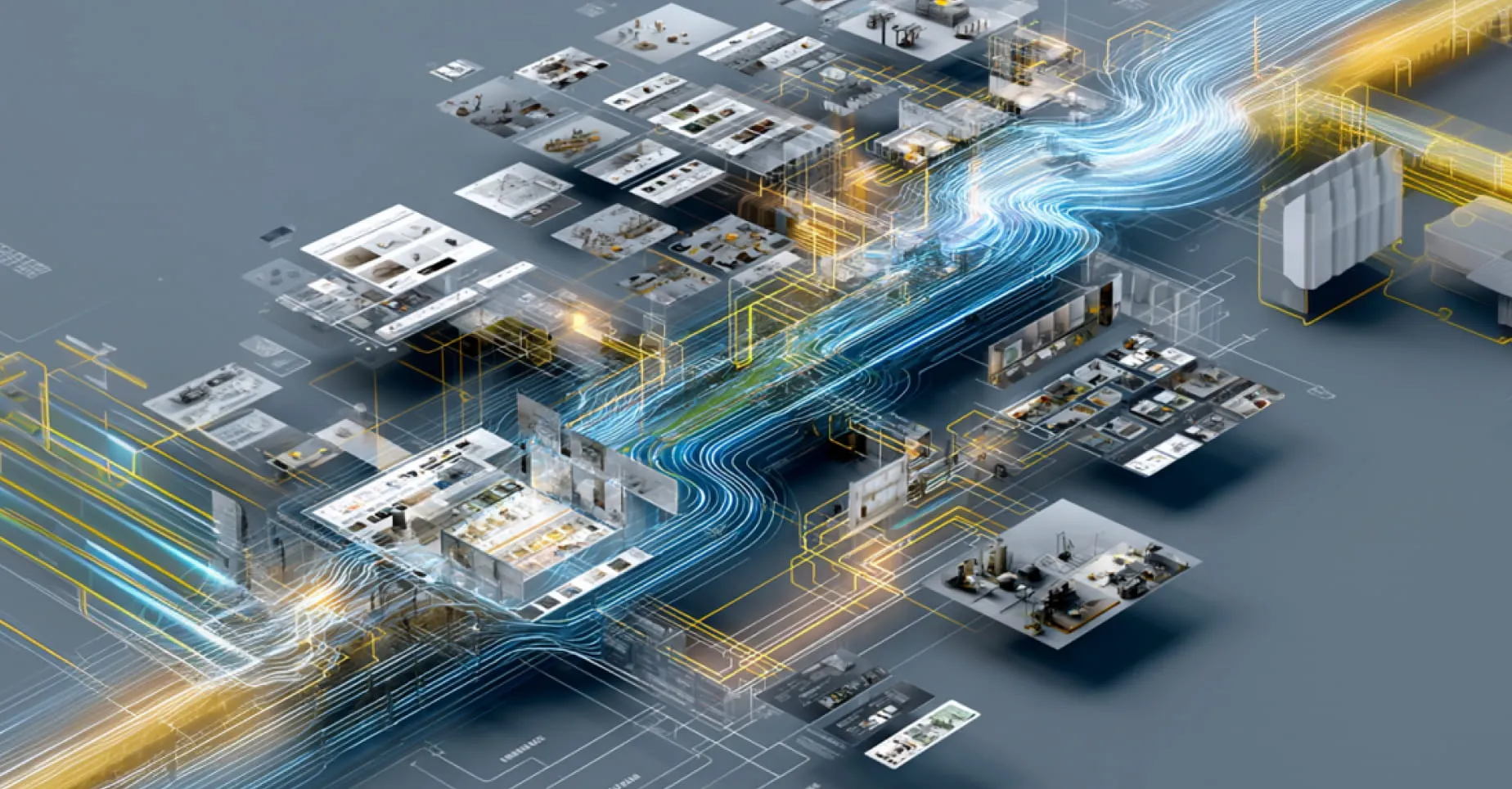

Merchandising is an essential part of modern online retail. Automated merchandising platforms allow business users to control search and browse results by affecting product ranking decisions, providing curated content and referring customers to relevant categories or thematic landing pages.

Oracle Endeca Information Discovery, once a popular product discovery platform, provided a comprehensive set of business rules to support key merchandising use cases. However, these days Endeca is a stagnating technology and many firms are looking to replatform to alternative open source solutions.

At Grid Dynamics, we have performed several Endeca migration projects with Tier-1 online retailers, and summarised our solutions in the Endeca migration blueprint. Support of Endeca business rules is always an important item on the roadmap. In this post we will share a few details about migration and support for Endeca business rules.

Endeca business rules

Conceptually, an Endeca business rule consists of two parts: a trigger and an action. A trigger is used for rule targeting, i.e. it defines the condition under which a business rule is activated, or fired.

Typical triggers include a search phrase that contains specific words, or a particular set of filters applied by the user. A rule can have several triggers; satisfying any one of them is enough for the rule to fire.

When a rule is fired, it performs actions. This is when a merchandiser has an opportunity to affect customer experience and tune it to her liking. Here are examples of such actions:

- redirect to a special page, e.g. to delivery options if the user has searched for “delivery”

- select facets, e.g. show waist and inseam facets when the customer is searching for “pants” or “jeans”

- boost products that are on special promotion or have high margins

- boost facet values, e.g. promote the retailer’s own brand to first place

- show a specific banner

You can find more details in Endeca Rules Manager documentation.

An overview of the Endeca rules structure

Let’s consider the structure of Endeca rules in more detail. As an example, we are going to look at the rule type called Keyword Redirect: a rule which redirects the user to a predefined page when the user’s search phrase matches a particular set of words (called “search terms”).

Endeca supports three types of keyword match:

- Match phrase: the search phrase contains the search terms sequentially, in strict order, but may also contain other words before or after them.

  Example: the rule is configured with search terms = “how to”. The search phrase “how to make an order” will trigger this rule. At the same time, the search phrase “how can I get to the store” will not trigger the rule.

- Match all: the search phrase contains all search terms in any order, with optional additional words in any position.

  Example: the rule is configured with search terms = “oven best pizza”. The search phrase “what is the best oven for cooking pizza” will trigger this rule.

- Match exact: the rule is triggered if and only if the search phrase is exactly equal to the search terms. No additional words are allowed.

  Example: the rule is configured with search terms = “order status”. Only the search phrase “order status” will trigger this rule; any other phrase will not.

Let’s consider how to replatform this type of rule to Elasticsearch – a popular open source search engine based on Lucene.
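To make the three match types concrete, here is a minimal Python sketch of their semantics. It is only an illustration, not how Endeca or Elasticsearch implement matching: the function names are our own, and tokenization is assumed to be simple lowercasing and whitespace splitting, with no stemming, synonyms or spelling correction.

```python
def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace; a stand-in for a real analyzer."""
    return text.lower().split()


def match_phrase(search_terms: str, phrase: str) -> bool:
    """True if the terms occur contiguously and in order inside the phrase."""
    terms, words = tokenize(search_terms), tokenize(phrase)
    n = len(terms)
    return any(words[i:i + n] == terms for i in range(len(words) - n + 1))


def match_all(search_terms: str, phrase: str) -> bool:
    """True if every term occurs somewhere in the phrase, in any order."""
    return set(tokenize(search_terms)) <= set(tokenize(phrase))


def match_exact(search_terms: str, phrase: str) -> bool:
    """True only if the phrase consists of exactly the search terms."""
    return tokenize(search_terms) == tokenize(phrase)


if __name__ == "__main__":
    # The examples from the list above.
    print(match_phrase("how to", "how to make an order"))
    print(match_phrase("how to", "how can I get to the store"))
    print(match_all("oven best pizza", "what is the best oven for cooking pizza"))
    print(match_exact("order status", "order status"))
```
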

Elasticsearch contains a powerful percolator feature which helps to support these three match types.

Indexing Endeca rules

We will treat the triggering of Endeca rules as a search problem, for two reasons. First, a large-scale system can hold thousands, or even tens of thousands, of rules, and we don’t want to evaluate every rule trigger on every request to check whether the rule should fire.

Second, we should support fuzzy matching of keyword-triggered rules with respect to synonyms and spelling correction, so we can still show facets for jeans if a customer types “jeanz”.

So, we will model the rules as a separate Elasticsearch index with nested documents, where the rule is the parent document and its triggers are child documents. The rule document will contain a list of actions. In our simplified case this field will contain the URL of the page to which the user will be redirected. Each trigger has a field with a configured search term; let’s call it a “phrase”.

We have to evaluate all the triggers against a customer phrase and, if a trigger matches, return the corresponding parent rule in the result list of fired rules. However, we cannot just search for the customer’s phrase within the trigger’s phrase field. This way, the customer’s phrase “how to make an order” will not match the trigger’s phrase field value “how to”. Of course, we can tokenize and analyze the customer query and carefully check whether all words matched. However, this quickly becomes cumbersome and slow.

What we actually need is an inverted search. Instead of searching for parts of the search phrase in the trigger, we should be searching for all the triggers within the search phrase. So, instead of searching for a search phrase within the index of all triggers, we are creating a temporary index containing a single document with the search phrase text and running all trigger phrases against this index checking if our single document matches each trigger.
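The inverted-search idea can be sketched in a few lines of Python. This is a toy model under our own assumptions: `Rule`, `all_terms` and `percolate` are hypothetical names, triggers are reduced to "all terms present" checks, and the loop visits every rule, which is exactly the cost a real engine tries to avoid by pre-selecting candidate queries.

```python
from dataclasses import dataclass
from typing import Callable

# A stored "query": a predicate evaluated against the terms of one document.
Trigger = Callable[[set[str]], bool]


@dataclass
class Rule:
    rule_id: str
    action: str
    triggers: list[Trigger]


def all_terms(*terms: str) -> Trigger:
    """Build a trigger that matches when every given term is in the document."""
    required = set(terms)
    return lambda doc_terms: required <= doc_terms


def percolate(rules: list[Rule], phrase: str) -> list[str]:
    """Treat the search phrase as a single document and run stored triggers on it."""
    doc_terms = set(phrase.lower().split())
    return [r.action for r in rules if any(t(doc_terms) for t in r.triggers)]


# Illustrative rules only; the URLs mirror the examples used in this post.
EXAMPLE_RULES = [
    Rule("1", "http://retailername.com/FAQ", [all_terms("how", "to")]),
    Rule("3", "http://retailername.com/top10ovens", [all_terms("oven", "best", "pizza")]),
]

if __name__ == "__main__":
    print(percolate(EXAMPLE_RULES, "what is the best oven for cooking pizza"))
```

The point of the sketch is the direction of the match: the queries are stored, and the incoming phrase plays the role of the document.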

Sounds complicated? Luckily, Elasticsearch has a special module called “percolator” that turns the problem of inverted search into a piece of cake. This module consists of a special field type called “percolator”, which stores queries, and a special “percolate” query. The percolate query takes a document as an argument and returns all the stored queries that this document matches (See how it works).

Let’s look at an example

Let’s get our hands dirty and look at the example below. Ensure that you have a fresh version of Elasticsearch running (mine is 7.5.1). I also suggest you install and run Kibana of the same version: all requests below are formatted to be used in this application.

First, let’s create an index for rules:

    PUT /rules
    {
      "mappings": {
        "properties": {
          "action" : {
            "type": "keyword"
          },
          "trigger" : {
            "type" : "nested",
            "properties": {
              "query": {
                "type": "percolator"
              }
            }
          },
          "phrase": {
            "type": "text",
            "fields": {
              "raw": {
                "type": "keyword"
              }
            }
          }
        }
      }
    }

Let’s see what is going on here:

- We create an index called “rules”

- Every rule has a field “action” which is going to contain a URL. The field type is “keyword” – since action data is just a payload, there is no need to analyze its value.

- The rule also has nested object(s) called “trigger”. Each trigger holds a “query” field of the special type “percolator”, where we are going to keep the query representing the match type.

- “phrase” is the field that the stored queries refer to, and the same field is supplied in the document passed at query time. In the mapping above it is declared at the top level, next to “trigger”. Note that the “phrase” field is indexed in two variations: “text” (analyzed and tokenized) and, as the “phrase.raw” sub-field, “keyword”. Different queries need different variations.

Now let’s index some rules.

The first rule has a trigger with a “match phrase” type. Elastic’s “match_phrase” query is the best for this purpose:

    PUT /rules/_doc/1?refresh
    {
      "action" : "http://retailername.com/FAQ",
      "trigger" : {
        "query" : {
          "match_phrase" : {
            "phrase" : "how to"
          }
        }
      }
    }

The second rule has a trigger with a “match exact” type. A simple “term” query is perfectly suitable for such a case.

Note that a term query does not analyze the value passed to it. Make sure that either the phrase you pass at query time is already normalized, or a normalizer (for example, lowercasing) is configured on the “phrase.raw” field.

    PUT /rules/_doc/2?refresh
    {
      "action" : "http://retailername.com/orders",
      "trigger" : {
        "query" : {
          "term" : {
            "phrase.raw" : "order status"
          }
        }
      }
    }

The last rule has a trigger with a “match all” type. Elastic’s “match” query is used with the “AND” operator.

    PUT /rules/_doc/3?refresh
    {
      "action" : "http://retailername.com/top10ovens",
      "trigger" : {
        "query" : {
          "match" : {
            "phrase" : {
              "query" : "oven best pizza",
              "operator" : "AND"
            }
          }
        }
      }
    }

Now we are ready for some searching!

We are going to test our little rules engine with three search phrases:

- how to make an order

- order status

- what is the best oven for cooking pizza

This is what a rules search request looks like:

    GET /rules/_search
    {
      "query": {
        "nested" : {
          "path" : "trigger",
          "query" : {
            "percolate" : {
              "field" : "trigger.query",
              "document" : {
                "phrase" : "search phrase"
              }
            }
          },
          "score_mode" : "max"
        }
      },
      "_source": "action"
    }

- The base of the request is the “percolate” query:

  - We pass the name of the field where the searchable queries are stored. In our case this field is “trigger.query”.

  - We also pass a simple document with one field, “phrase”. Its value is the user’s search phrase.

- The “percolate” query returns only triggers, but the necessary action (the URL) is stored on the rule. So the “percolate” query is wrapped into a “nested” query, which returns parent objects by matching their children. In our case the “nested” query returns rules by matching triggers.
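In application code, this request body can be produced by a small helper. The following Python sketch is only one possible way to do it: `build_rules_search` is a hypothetical name, and the client-side normalization (lowercasing and collapsing whitespace) is our own assumption, added because the “term” query used for exact matching does not analyze its input. If you normalize here, the values stored in the exact-match triggers have to be normalized the same way.

```python
import json


def build_rules_search(search_phrase: str) -> dict:
    """Build the nested percolate request body for a user's search phrase."""
    # Assumed normalization: lowercase and collapse runs of whitespace.
    normalized = " ".join(search_phrase.lower().split())
    return {
        "query": {
            "nested": {
                "path": "trigger",
                "query": {
                    "percolate": {
                        "field": "trigger.query",
                        "document": {"phrase": normalized},
                    }
                },
                "score_mode": "max",
            }
        },
        # Only the action (the redirect URL) is needed from a fired rule.
        "_source": "action",
    }


if __name__ == "__main__":
    print(json.dumps(build_rules_search("  Order   STATUS "), indent=2))
```

The resulting dictionary can be serialized to JSON and sent to `GET /rules/_search` with whichever HTTP or Elasticsearch client the application already uses.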

Let’s test the first phrase “how to make an order”:

    GET /rules/_search
    {
      "query": {
        "nested" : {
          "path" : "trigger",
          "query" : {
            "percolate" : {
              "field" : "trigger.query",
              "document" : {
                "phrase" : "how to make an order"
              }
            }
          },
          "score_mode" : "max"
        }
      },
      "_source": "action"
    }

The response will look like:

    "hits" : {
      "total" : {
        "value" : 1,
        "relation" : "eq"
      },
      "max_score" : 0.26152915,
      "hits" : [
        {
          "_index" : "rules",
          "_type" : "_doc",
          "_id" : "1",
          "_score" : 0.26152915,
          "_source" : {
            "action" : "http://retailername.com/FAQ"
          }
        }
      ]
    }

The rule with “_id” = “1” was returned, as expected.

Now we are going to test the second phrase “order status”:

    GET /rules/_search
    {
      "query": {
        "nested" : {
          "path" : "trigger",
          "query" : {
            "percolate" : {
              "field" : "trigger.query",
              "document" : {
                "phrase" : "order status"
              }
            }
          },
          "score_mode" : "max"
        }
      },
      "_source": "action"
    }

The response looks like:

    "hits" : {
      "total" : {
        "value" : 1,
        "relation" : "eq"
      },
      "max_score" : 0.13076457,
      "hits" : [
        {
          "_index" : "rules",
          "_type" : "_doc",
          "_id" : "2",
          "_score" : 0.13076457,
          "_source" : {
            "action" : "http://retailername.com/orders"
          }
        }
      ]
    }

Which means that the rule with “_id” = “2” was matched as expected by the percolator.

The last phrase “what is the best oven for cooking pizza”:

    GET /rules/_search
    {
      "query": {
        "nested" : {
          "path" : "trigger",
          "query" : {
            "percolate" : {
              "field" : "trigger.query",
              "document" : {
                "phrase" : "what is the best oven for cooking pizza"
              }
            }
          },
          "score_mode" : "max"
        }
      },
      "_source": "action"
    }

This returns the rule with “_id” = “3”, as expected:

    "hits" : {
      "total" : {
        "value" : 1,
        "relation" : "eq"
      },
      "max_score" : 0.39229372,
      "hits" : [
        {
          "_index" : "rules",
          "_type" : "_doc",
          "_id" : "3",
          "_score" : 0.39229372,
          "_source" : {
            "action" : "http://retailername.com/top10ovens"
          }
        }
      ]
    }

As you can see, we have just created basic support for one of the key types of Endeca rules using the Elasticsearch percolator. Of course, a real-life solution has to be more sophisticated and support things like fuzzy matching and synonym expansion, but this example illustrates the approach well enough. We can support other kinds of Endeca rules in a similar manner.
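As a recap, the mapping from Endeca match types to the stored queries used in this walkthrough can be gathered into one helper, which is handy when converting exported rules in bulk. This is a hedged sketch: the function names and the match type labels `"phrase"`, `"all"` and `"exact"` are hypothetical, and the real Endeca export format is not modeled here.

```python
def trigger_query(match_type: str, search_terms: str) -> dict:
    """Translate one keyword trigger into the query stored in the percolator field."""
    if match_type == "phrase":
        # Terms must appear contiguously and in order.
        return {"match_phrase": {"phrase": search_terms}}
    if match_type == "all":
        # All terms must appear, in any order.
        return {"match": {"phrase": {"query": search_terms, "operator": "AND"}}}
    if match_type == "exact":
        # The whole phrase must equal the terms; term queries are not analyzed.
        return {"term": {"phrase.raw": search_terms}}
    raise ValueError(f"unknown match type: {match_type!r}")


def rule_document(action: str, match_type: str, search_terms: str) -> dict:
    """Build the body of a rule document with a single trigger."""
    return {
        "action": action,
        "trigger": {"query": trigger_query(match_type, search_terms)},
    }


if __name__ == "__main__":
    print(rule_document("http://retailername.com/orders", "exact", "order status"))
```
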

What about Solr?

Over the course of our Endeca replatforming projects, we often had to support this kind of trigger matching in Solr, which required quite sophisticated code because Solr had no percolator at the time of writing. However, the ability to match documents against queries was recently merged into Lucene. We expect that a percolator will appear in Solr soon, and we will update this blog post with a Solr-based solution.

Conclusion

In this blog post we described one of the significant problems of most Endeca replatforming projects – support for Endeca-like merchandising rules. As it turns out, it can be solved really nicely with the Elasticsearch percolator module.

We will continue sharing our Endeca migration experience in other blog posts.

Stay tuned and happy searching!

