Leveraging Apache Kafka and GraphQL to build unified integrations in a composable commerce ecosystem

Sep 05, 2023 • 11 min read

- Peer-to-peer connections: Common approach for building composable commerce solutions

- Introducing a unified integration approach

- Unified integration approach benefits

- Introducing the Kafka for composable commerce starter kit

- Embrace the unified integration approach for future-proof composable commerce solutions

- Appendix

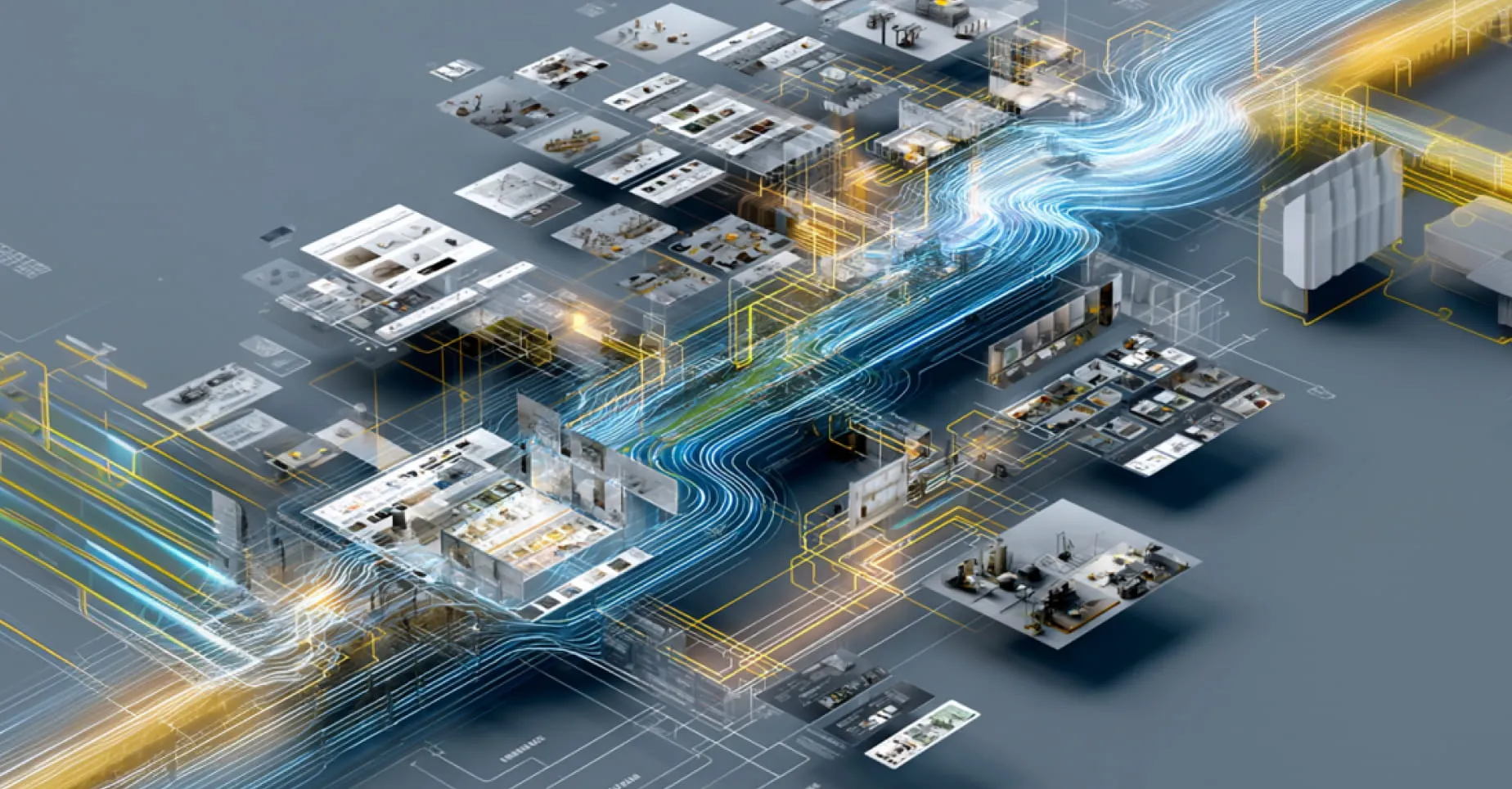

While composable commerce unlocks a flexible, scalable technology ecosystem for building digital solutions that meet unique business needs, what most differentiates one composable ecosystem from another is how seamlessly its packaged business capabilities (PBCs) are integrated. The common integration approach relies on pre-built connectors to link PBCs, but enterprises facing evolving business challenges need a more advanced approach. Fortunately, we can develop a unified integration approach that encapsulates the complexity of PBC integrations and streamlines interactions between systems using two critical layers:

- A messaging platform based on Apache Kafka that serves as the glue between PBCs

- A GraphQL-powered API facade that provides an abstraction layer, hiding PBC details from clients

Let’s explore the mechanics of the common integration approach, examine the challenges it presents, and delve into how our revolutionary unified integration approach redefines the way composable commerce ecosystems are managed, integrated, and scaled.

Peer-to-peer connections: Common approach for building composable commerce solutions

The common approach to building composable commerce solutions relies on peer-to-peer integrations between PBCs. It focuses on establishing direct connections between systems through pre-built connectors or APIs. Vendors provide a wide range of ready-made connectors for their PBCs, and by using them, businesses can integrate components quickly. As a result, creating a robust, tailored digital commerce ecosystem becomes largely a matter of configuration rather than extensive development.

Challenges of peer-to-peer connections

While peer-to-peer integrations offer numerous benefits, it is also essential to consider their potential limitations and challenges in the implementation process.

- Complexity with increased connections: As the number of integrated PBCs grows, managing peer-to-peer connections can become complex, necessitating a strong governance framework and monitoring mechanisms for smooth operations.

- Diverse data structures: Each PBC might have its unique data structure and modeling conventions. Integrating these systems requires meticulous data mapping and alignment, introducing extra complexity to the integration process.

- Challenges for large enterprises: Adopting peer-to-peer integration can be more challenging for large enterprises with established corporate-wide information architectures. Integrating multiple PBCs into existing infrastructure demands careful consideration and planning to navigate complexities effectively.

- Dispersed client model: In a dispersed client model, different parts of the client view are spread across various PBCs and systems. This dispersion complicates updates, requiring data synchronization across systems to maintain consistency. Retrieving a complete domain data view requires querying multiple systems independently, manually aggregating data, and collating results. This can lead to complexity, data inconsistencies, and potential errors.


Enterprise domain models vs. PBC models

One of the significant challenges large enterprises face with peer-to-peer integration is mapping their own data models to the data models provided by PBC vendors. In a classic composable solution, PBC data models may exist independently of corporate information models, so the two worlds operate in parallel, with the following implications:

- Data inconsistency: The existence of parallel data models can lead to data inconsistency and discrepancies between the corporate information models and PBC data models. This inconsistency may result in errors, inaccuracies, or misinterpretation of data, affecting decision-making processes and overall business operations.

- Complex data mapping: Mapping data between different models can be a complex and error-prone task. It may require custom transformations and data mapping processes to ensure seamless integration between the corporate data models and the data models of individual PBCs. This complexity can cause delays in integration and hinder the agility of the composable commerce ecosystem.

- Integration challenges: Integrating systems with disparate data models can present integration challenges, especially when attempting to achieve a holistic view of data across the organization. This can lead to difficulties in aggregating and analyzing data from multiple sources, hindering the ability to gain valuable insights and a comprehensive understanding of the business.

- Maintenance and upgrades: When corporate information models and PBC data models diverge, maintenance and upgrades of the integration solution become more complex. Keeping the data mappings up-to-date and synchronized can be time-consuming, especially when PBCs undergo updates or new PBCs are integrated into the ecosystem.

Introducing a unified integration approach

To address the challenges of peer-to-peer integrations, our unified integration approach introduces two critical layers: the messaging platform and the API facade. Together, they form the backbone of the composable commerce ecosystem. By encapsulating the complexity of PBC integration, they streamline interactions between clients and PBCs and turn the individual systems into a distributed e-commerce system.

Messaging platform: Ensuring communication between integrated systems

The messaging platform is the heart of the unified approach. It acts as a data bus or orchestration hub to which every system connects to retrieve data and interact with the other integrated systems. This smart layer becomes the central point for data exchange, ensuring consistency and facilitating integration. At first glance, it might resemble an enterprise service bus (ESB), but the key distinction lies in its flexibility. In an ESB architecture, the integration logic for data transformation, mapping, and routing is typically dictated by the centralized bus, making it less adaptable to evolving business needs.

However, the unified approach embraces a core mantra of event-driven services—centralizing an immutable stream of data while decentralizing the freedom to act, adapt, and change. This flexibility is further enhanced by the fact that each service has the freedom to interpret and utilize the events as needed, making decisions based on its specific context and requirements. The integration logic is distributed across the ecosystem, empowering services to evolve independently without relying on a monolithic ESB.
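To make the "centralize the stream, decentralize the interpretation" idea concrete, here is a minimal in-memory sketch. It assumes a toy `EventLog` standing in for a Kafka topic and hypothetical `ProductUpdated` events; real Kafka topics are partitioned, replicated logs, and consumers would use a Kafka client rather than these illustrative classes. The point is that the log is never modified, each consumer tracks its own offset (much like a consumer group), and each service decides for itself what an event means.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EventLog:
    """Toy stand-in for a Kafka topic: an append-only, shared list of events."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset of the new event


@dataclass
class Consumer:
    """Each consumer tracks its own offset and decides what an event means to it."""
    name: str
    handle: Callable[[dict], None]
    offset: int = 0

    def poll(self, log: EventLog) -> int:
        processed = 0
        while self.offset < len(log.events):
            self.handle(log.events[self.offset])  # read-only: the log is never modified
            self.offset += 1
            processed += 1
        return processed


# Two hypothetical services interpret the same ProductUpdated events differently.
search_index: dict = {}
low_stock: list = []


def index_for_search(event: dict) -> None:
    # The search service only needs the searchable name.
    if event["type"] == "ProductUpdated":
        search_index[event["sku"]] = event["name"]


def flag_low_stock(event: dict) -> None:
    # A replenishment service only cares about stock falling below a threshold.
    if event["type"] == "ProductUpdated" and event.get("stock", 0) < 5:
        low_stock.append(event["sku"])


log = EventLog()
log.append({"type": "ProductUpdated", "sku": "MUG-1", "name": "Mug", "stock": 12})
log.append({"type": "ProductUpdated", "sku": "CAP-2", "name": "Cap", "stock": 3})

search = Consumer("search-indexer", index_for_search)
replenish = Consumer("replenishment", flag_low_stock)
search.poll(log)
replenish.poll(log)
```

Adding a third service means adding another consumer with its own handler; neither the log nor the existing consumers change, which is the contrast with ESB-centralized integration logic.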

The smart integration layer is built on an event-driven architecture consisting of PBC-specific connectors, domain-specific topics, and consolidated domain object views. Together, these components let PBCs communicate through messages rather than direct peer-to-peer calls, keeping the whole composable commerce ecosystem integrated.

API facade: Streamlining client engagement

To enable a more frictionless and controlled interaction between clients and the composable commerce ecosystem, an intelligent facade layer is introduced. It shields clients from the underlying complexities of the PBCs, their specific data models, and the Kafka messaging platform, providing a simplified and standardized way to interact with their domain objects.

Clients can query the specific data they need without having to understand the intricacies of the PBCs or the messaging platform. Instead of making direct requests to individual PBCs or the Kafka platform, clients can submit their queries through the API facade layer, which fetches and consolidates the relevant data.

Exploring technologies and data modeling with a unified integration approach

As a leading distributed event streaming platform, Apache Kafka is the technology of choice for the messaging platform. With Kafka as the messaging platform, the unified approach benefits from its scalability, fault tolerance, and ability to handle large volumes of data. Kafka’s event-driven, publish-subscribe model lets integrated systems receive updates in near real time and stay synchronized. This allows businesses to respond to events promptly and maintain consistent data across the entire composable commerce ecosystem.

The unified integration approach uses Kafka connectors, webhooks, or custom applications to link the systems that send and receive data. These connectors form a communication layer that bridges diverse systems, allowing them to read from and write to Kafka topics efficiently. Because each new system or PBC only needs its own connector rather than a set of point-to-point links, this layer keeps the ecosystem adaptable and extensible as it evolves.
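A PBC-specific connector essentially translates a vendor payload into a record on a domain-specific topic. The sketch below assumes a simplified webhook payload shape (`entityType`, `id`, `data`) and hypothetical topic names such as `domain.product`; a real connector would parse each vendor’s own format. It only builds the `(topic, key, value)` triple that a Kafka producer would send.

```python
import json
from typing import Any, Dict, Tuple

# Hypothetical mapping from a PBC entity type to a domain-specific topic name.
TOPIC_BY_ENTITY = {
    "product": "domain.product",
    "order": "domain.order",
    "asset": "domain.asset",
}


def to_domain_record(source: str, payload: Dict[str, Any]) -> Tuple[str, str, bytes]:
    """Map a PBC webhook payload to a (topic, key, value) triple for a Kafka producer.

    The payload shape ({"entityType", "id", "data"}) is an assumption for this sketch;
    each real PBC connector would parse its vendor's own format here.
    """
    entity = payload["entityType"]
    topic = TOPIC_BY_ENTITY.get(entity)
    if topic is None:
        raise ValueError(f"no domain topic for entity type {entity!r}")
    # Keying by entity id sends all events for one entity to the same partition
    # (with the default partitioner and a fixed partition count), so consumers
    # see that entity's events in order.
    key = payload["id"]
    value = json.dumps(
        {"source": source, "entity": entity, "data": payload["data"]},
        sort_keys=True,
    ).encode("utf-8")
    return topic, key, value


topic, key, value = to_domain_record(
    "cloudinary",
    {"entityType": "asset", "id": "img-42", "data": {"url": "https://example.com/img-42.jpg"}},
)
```

In a deployment, the triple would be handed to a Kafka producer; with the confluent-kafka Python client, for example, that is `producer.produce(topic, key=key, value=value)`.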

When it comes to the API facade, GraphQL emerges as a robust contender. It provides exceptional flexibility, making it well-suited for diverse clients, including complex systems and microservices-based architectures. Moreover, GraphQL empowers clients to define the data they need, enabling precise field-specific requests.

This eliminates the problem of over-fetching, where clients receive more data than necessary, thereby reducing bandwidth and improving performance. And regardless of the number of underlying systems and PBCs, clients interact with the “centralized ecosystem” through a single, standardized GraphQL API, which simplifies client integration and improves consistency.
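The effect of field-specific requests can be illustrated without a GraphQL server. The sketch below is not a GraphQL implementation; it is a hypothetical `project` helper that mimics what a resolver does with a selection set: it returns only the requested fields from a consolidated product view, including nested objects and lists. The product fields are assumed for illustration.

```python
from typing import Any, Dict


def project(obj: Dict[str, Any], selection: Dict[str, Any]) -> Dict[str, Any]:
    """Return only the fields named in `selection`.

    A value of True selects a scalar field; a nested dict selects sub-fields of an
    object (or of each object in a list), mirroring a GraphQL selection set.
    """
    result: Dict[str, Any] = {}
    for name, sub in selection.items():
        value = obj.get(name)
        if isinstance(sub, dict) and isinstance(value, list):
            result[name] = [project(item, sub) for item in value]
        elif isinstance(sub, dict) and isinstance(value, dict):
            result[name] = project(value, sub)
        else:
            result[name] = value
    return result


# Consolidated product view as the facade might hold it (hypothetical fields).
product = {
    "id": "MUG-1",
    "name": "Mug",
    "description": "A long marketing description.",
    "price": {"amount": 1299, "currency": "EUR"},
    "images": [{"url": "https://example.com/mug.jpg", "width": 800}],
    "stock": 12,
}

# Roughly equivalent to the GraphQL query:
#   { product(id: "MUG-1") { name price { amount } images { url } } }
response = project(product, {"name": True, "price": {"amount": True}, "images": {"url": True}})
```

The description, currency, image width, and stock never leave the facade, which is the over-fetching saving the paragraph above describes.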

A generic domain model is used as an intermediary to enable seamless data exchange and interaction among various PBCs. This generic model acts as a common language or format that allows PBCs to save and extract data. It establishes a standardized data representation, ensuring consistent interactions and reducing the need for extensive mapping and transformation efforts.

Business flow in the unified approach: API facade and Kafka interaction

In the unified approach, the business flow revolves around two key layers: the API facade and the messaging platform (Kafka, in our case). Clients interact with their domain model through the GraphQL API facade, submitting data updates as GraphQL mutations, for example to create new orders, update customer details, or modify product information. The GraphQL API facade then propagates each update to the relevant PBCs.
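One way the facade could decide which PBCs receive which parts of a mutation is a field-ownership map. The sketch below assumes a hypothetical map (names and prices owned by commercetools, images by Cloudinary, stock by FluentCommerce) and a hypothetical `updateProduct` mutation; the source does not specify how ownership is modeled, so treat this as one possible design.

```python
from typing import Any, Dict

# Hypothetical field ownership: which PBC is the system of record for each field.
FIELD_OWNER = {
    "name": "commercetools",
    "price": "commercetools",
    "images": "cloudinary",
    "stock": "fluentcommerce",
}


def route_update(entity_id: str, changes: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Split one mutation's input into one update payload per owning PBC."""
    unknown = sorted(f for f in changes if f not in FIELD_OWNER)
    if unknown:
        # Reject the whole mutation rather than applying it partially.
        raise KeyError(f"no owning PBC for fields: {unknown}")
    per_pbc: Dict[str, Dict[str, Any]] = {}
    for field_name, value in changes.items():
        owner = FIELD_OWNER[field_name]
        per_pbc.setdefault(owner, {"id": entity_id})[field_name] = value
    return per_pbc


# Input of a hypothetical mutation such as:
#   mutation { updateProduct(id: "MUG-1", input: { name: "Mug XL", stock: 4 }) { id } }
updates = route_update("MUG-1", {"name": "Mug XL", "stock": 4})
```

Keeping ownership in one map makes the facade the only place that knows which PBC stores which field, so clients never need that knowledge.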

Each PBC processes its respective update according to its specific domain and functionality. For example, a product update might trigger updates in the product information management (PIM) PBC. After processing the updates, each PBC publishes the corresponding events to the Kafka messaging platform. These events represent the changes made to the domain objects within each PBC.

The messaging platform stores these events and aggregates them into materialized views (in Kafka Streams, for example, a KTable backed by a state store). When clients need to retrieve domain data, such as order history, product details, or customer profiles, they again send queries through the GraphQL API facade. Acting as a unified entry point, the facade forwards these queries to the messaging platform, which answers them from the materialized views, providing an aggregated, unified view of domain data from the various PBCs.
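Conceptually, a materialized view is a fold over the event stream, keyed by entity. The following in-memory sketch assumes a simple event shape (`source`, `key`, `fields`) and merges field-level changes from several PBCs into one consolidated record per product, with later values winning. It treats `fields: None` as a tombstone, echoing the null-value tombstone convention of compacted Kafka topics. It illustrates the idea only; it is not Kafka Streams code.

```python
from typing import Any, Dict, Iterable


def materialize(events: Iterable[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Fold change events from several PBCs into one consolidated view per entity key.

    Later values for the same field overwrite earlier ones ("latest wins"), and an
    event whose "fields" is None acts as a tombstone that removes the entity.
    """
    view: Dict[str, Dict[str, Any]] = {}
    for event in events:
        key, fields = event["key"], event["fields"]
        if fields is None:
            view.pop(key, None)
            continue
        view.setdefault(key, {"id": key}).update(fields)
    return view


# Hypothetical events published by three PBCs about the same products.
events = [
    {"source": "commercetools", "key": "MUG-1", "fields": {"name": "Mug", "price": 1299}},
    {"source": "cloudinary", "key": "MUG-1", "fields": {"image": "https://example.com/mug.jpg"}},
    {"source": "fluentcommerce", "key": "MUG-1", "fields": {"stock": 12}},
    {"source": "commercetools", "key": "MUG-1", "fields": {"price": 1099}},
    {"source": "commercetools", "key": "CAP-2", "fields": {"name": "Cap", "price": 999}},
    {"source": "commercetools", "key": "CAP-2", "fields": None},
]
products = materialize(events)
```

A client query for `MUG-1` can then be answered from a single record instead of three separate PBC calls.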

Navigating the foundational information architecture

The generic domain model serves as a foundational data structure within the unified integration solution, facilitating seamless data exchange and interaction among the integrated systems and PBCs. This model is designed to accommodate a wide range of data types and is built to support the dynamic needs of the composable commerce ecosystem.

It consists of three key components:

- Data updates: Data updates form the core of the generic domain model. They represent the actual information exchanged, updated, or processed across the integrated systems. This can include various types of data, such as customer information, product details, order status, inventory updates, and more.

- Context: The context provides additional information relevant for implementing data updates within the integrated systems. It includes data that helps the systems understand the purpose, origin, and intent of every data update.

- Properties: The properties of generic domain models offer additional metadata that aids in the processing of data updates. These properties provide essential details that influence how the data is consumed, transformed, or stored within the composable commerce ecosystem.
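The three components above map naturally onto a message envelope. The sketch below is one possible shape, not the starter kit’s actual schema: the class and field names (`GenericDomainMessage`, `Context`, `intent`, `correlation_id`, `schemaVersion`) are assumptions chosen for illustration, and JSON is assumed as the wire format.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass
class Context:
    source: str  # which system produced the update, e.g. "commercetools"
    intent: str  # why it was produced, e.g. "PRICE_CHANGE"
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class GenericDomainMessage:
    entity_type: str        # e.g. "product", "order"
    entity_id: str
    data: Dict[str, Any]    # the data update itself
    context: Context        # purpose, origin, and intent of the update
    properties: Dict[str, str] = field(default_factory=dict)  # processing metadata
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        # asdict() also converts the nested Context dataclass into a dict.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "GenericDomainMessage":
        d = json.loads(raw)
        d["context"] = Context(**d["context"])
        return cls(**d)


msg = GenericDomainMessage(
    entity_type="product",
    entity_id="MUG-1",
    data={"price": 1099},
    context=Context(source="commercetools", intent="PRICE_CHANGE"),
    properties={"schemaVersion": "1"},
)
restored = GenericDomainMessage.from_json(msg.to_json())
```

Because every PBC connector reads and writes this one envelope, each PBC needs a single mapping to and from the generic model instead of one mapping per peer system.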

Data migration strategy

Migrating from a peer-to-peer integration approach to the unified integration solution requires careful planning and execution to transfer data and functionality without disruption. A data migration tool serves as an intermediary: it extracts data from the existing systems or databases (the source systems) and loads it into the Kafka messaging platform in batches. This migrated data becomes the foundation of a unified, scalable composable commerce ecosystem.
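The extract-map-publish loop of such a migration tool can be sketched as follows. The `to_message` and `publish_batch` callables are hypothetical placeholders: in practice, `to_message` would build a generic domain message and `publish_batch` would hand each batch to a Kafka producer. The batch size of 500 is an arbitrary example value.

```python
from itertools import islice
from typing import Any, Callable, Dict, Iterable, Iterator, List


def batched(records: Iterable[Any], size: int) -> Iterator[List[Any]]:
    """Yield lists of at most `size` records (itertools.batched needs Python 3.12)."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def migrate(
    source_rows: Iterable[Dict[str, Any]],
    to_message: Callable[[Dict[str, Any]], Dict[str, Any]],
    publish_batch: Callable[[List[Dict[str, Any]]], None],
    batch_size: int = 500,
) -> int:
    """Extract rows from a source system, map them to the generic model, publish in batches."""
    count = 0
    for batch in batched(source_rows, batch_size):
        messages = [to_message(row) for row in batch]
        publish_batch(messages)
        count += len(messages)
    return count


def legacy_rows(n: int) -> Iterator[Dict[str, Any]]:
    # Hypothetical rows from a legacy product table.
    for i in range(n):
        yield {"sku": f"SKU-{i}", "title": f"Product {i}"}


published: List[List[Dict[str, Any]]] = []
total = migrate(
    legacy_rows(1200),
    to_message=lambda row: {"entity_type": "product", "entity_id": row["sku"], "data": {"name": row["title"]}},
    publish_batch=published.append,
    batch_size=500,
)
```

Streaming rows through a generator keeps memory bounded regardless of the size of the source table.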

Error handling and monitoring

Robust error handling and monitoring are essential to the reliability and resilience of the composable commerce ecosystem under the unified integration approach. The dead letter queue (DLQ) pattern handles erroneous or failed messages by routing them to a separate queue for inspection and reprocessing, while message processing metrics provide insight into system performance, reveal bottlenecks, and help optimize data flow.
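The following sketch shows the DLQ pattern together with simple processing metrics. It is an in-memory illustration under stated assumptions: `dead_letters` stands in for a DLQ topic, a `Counter` stands in for a metrics system, and `update_search_index` is a hypothetical handler. Retry count and error context are kept with each dead-lettered message so it can be inspected and replayed.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class RetryingProcessor:
    """Process messages with bounded retries; park failures in a dead letter queue."""
    handler: Callable[[Dict[str, Any]], None]
    max_attempts: int = 3
    dead_letters: List[Dict[str, Any]] = field(default_factory=list)  # stand-in for a DLQ topic
    metrics: Counter = field(default_factory=Counter)  # simple processing metrics

    def process(self, message: Dict[str, Any]) -> bool:
        last_error = None
        for _ in range(self.max_attempts):
            try:
                self.handler(message)
                self.metrics["processed"] += 1
                return True
            except Exception as exc:  # in practice, only retry errors that may be transient
                last_error = exc
                self.metrics["failed_attempts"] += 1
        # Keep the original message plus error context so it can be inspected and replayed.
        self.dead_letters.append(
            {"message": message, "error": repr(last_error), "attempts": self.max_attempts}
        )
        self.metrics["dead_lettered"] += 1
        return False


def update_search_index(message: Dict[str, Any]) -> None:
    # Hypothetical handler: rejects product messages without a price.
    if message.get("price") is None:
        raise ValueError("price is required")


processor = RetryingProcessor(update_search_index)
results = [processor.process(m) for m in [{"sku": "MUG-1", "price": 1099}, {"sku": "CAP-2"}]]
```

For sink connectors built on Kafka Connect, a comparable behavior is available through configuration (`errors.tolerance=all` together with `errors.deadletterqueue.topic.name`) rather than custom code.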

Unified integration approach benefits

The unified integration approach offers a streamlined solution to the challenges of peer-to-peer integrations. Its integration platform acts as a central hub for data routing, transformation, and orchestration, enabling seamless connectivity and efficient data exchange between the integrated systems. Benefits of the unified integration approach include:

- Simplified integration management: By centralizing the integration process through a dedicated platform, businesses can more effectively manage and monitor the connections between systems. This simplifies the overall integration management, reduces complexity, and facilitates troubleshooting and maintenance.

- Consistency and control: The centralized integration platform ensures consistency in data exchange and adheres to standardized integration practices. It offers businesses greater control over the flow of data, enabling them to ensure data integrity and security across the composable commerce ecosystem.

- Scalability and adaptability: The centralized integration platform provides a scalable infrastructure that can accommodate the growth and evolving needs of the composable commerce ecosystem. New systems can be easily added to the centralized hub, minimizing the integration efforts required for each individual system.

- Vendor-agnostic integration: Another significant benefit is the reduced risk of vendor lock-in. By using a centralized integration platform, businesses can decouple themselves from relying solely on connectors provided by specific vendors. Instead, they gain the flexibility to integrate with a variety of systems and PBCs, regardless of the vendor’s offerings.

Introducing the Kafka for composable commerce starter kit

The Proof of Concept (POC) project for the Kafka for Composable Commerce Starter Kit aimed to validate the effectiveness and feasibility of the unified integration approach in the context of composable commerce solutions. The project focused on integrating four widely adopted systems: commercetools, Algolia, Cloudinary and FluentCommerce. Each system played a crucial role in the overall composable commerce ecosystem, and the unified approach served as the foundation for seamless data exchange, real-time updates, and efficient processing across these integrated systems.

By integrating commercetools, Algolia, Cloudinary, and FluentCommerce, the project showcased how real-time updates, efficient data processing, and streamlined communication can create a cohesive and agile digital commerce environment. Using Kafka as the messaging platform and implementing the generic domain model provided a solid foundation for seamless data exchange, enabling businesses to build scalable, flexible, and future-proof composable commerce solutions. The successful validation of these concepts in the POC project paved the way for adopting the unified integration approach in larger, more complex digital commerce environments.

Messaging platform reference implementation overview

In the Proof of Concept (POC) phase, the messaging platform was implemented to connect and orchestrate four essential systems: commercetools, Algolia, Cloudinary, and FluentCommerce. Built with Kafka connectors and Kafka Streams, the resulting composable commerce ecosystem delivered real-time updates, efficient data processing, and streamlined communication.

Embrace the unified integration approach for future-proof composable commerce solutions

Are you ready to unlock the full potential of your digital commerce ecosystem? We invite you to explore the power of our Kafka for Composable Commerce Starter Kit. It offers a cutting-edge solution designed to revolutionize the way you manage, integrate, and scale your composable commerce systems.

Embrace the unified integration approach leveraging Apache Kafka and witness the transformation of your composable commerce ecosystem. Break free from the limitations of traditional peer-to-peer integrations and experience a future-proof, scalable solution.

Join us on this journey toward a new era of digital commerce. Contact us today to learn more about how we can revolutionize your business and create a seamless, scalable, and customer-centric commerce ecosystem.

Appendix

Integrated systems description:

- commercetools: commercetools, a pioneering headless commerce platform that coined the term “headless commerce,” offers strong MACH support, enables rapid customization of the shopping experience, facilitates A/B testing, optimizes the customer journey, and simplifies data management through its advanced Merchant Center.

- Algolia: Algolia’s powerful search capabilities improved customer experiences with rapid, precise results. Integrating Algolia enabled efficient, real-time product indexing, ensuring swift and easy product searches. Its user-friendly API, quick deployment, scalability, high performance, and comprehensive merchandising appeal to time-sensitive businesses.

- Cloudinary: Cloudinary, a robust cloud-based digital asset management (DAM) platform, uses AI, automation, and advanced image processing to deliver fast, high-quality, scalable visual experiences. It simplifies media content management, ensuring efficient storage, retrieval, and optimization of assets across the commerce ecosystem.

- FluentCommerce: FluentCommerce served as the order management system (OMS) and inventory management system, optimizing orders, inventory, and fulfillment to ensure a smooth customer journey from purchase to delivery. Integrated with commercetools, it unified order management across channels, including post-purchase tasks and returns.

